Amazon Managed Streaming for Apache Kafka (MSK) is a fully managed service that simplifies setting up and running Apache Kafka clusters. Kafka is a popular open-source platform for real-time data streaming, event processing, and data integration tasks, but managing and scaling Kafka clusters can be resource-intensive. With Amazon MSK, engineers and developers can focus on building data streaming applications without worrying about the complexities of deploying, scaling, and maintaining their Kafka infrastructure.

In this article, we’ll explore the key features that make Amazon MSK a strong choice for running Kafka workloads, including its integration with other AWS services and its monitoring capabilities. We’ll then walk through setting up a cluster step by step.

What Amazon MSK is and How It Works

Amazon MSK provisions and operates Apache Kafka clusters on your behalf. Kafka is known for high-throughput, real-time data streaming and is often used for event processing, analytics, and data integration, but setting up and maintaining clusters requires expertise in configuration, scaling, and fault tolerance. Amazon MSK takes on that operational work, allowing engineers to focus on application development instead of infrastructure management.

How Amazon MSK Works

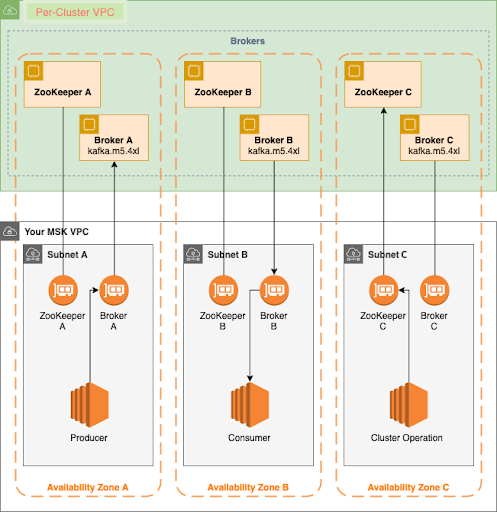

Diagram “How Amazon MSK Works”

This diagram provides a visual representation of how Amazon MSK (Managed Streaming for Apache Kafka) operates. It showcases the per-cluster VPC (Virtual Private Cloud) structure, broker and ZooKeeper instances, and how different components interact across multiple availability zones.

Key components and how they work

- ZooKeeper Nodes:

Each Kafka cluster requires a ZooKeeper ensemble to manage broker metadata and orchestrate leader election. In this diagram, there are three ZooKeeper nodes, each placed in a separate availability zone (A, B, and C). They provide high availability for Kafka metadata management.

- Kafka Brokers:

Kafka brokers are the core components that handle data streams. They receive, store, and serve data in response to producer and consumer requests. In this setup, the three brokers (A, B, and C) each run on their own EC2 instance of type kafka.m5.4xlarge, one per availability zone, for fault tolerance.

- Subnets and VPC:

The Kafka cluster operates within a Virtual Private Cloud (VPC) for secure network isolation. Each broker is placed in its own subnet, one per availability zone, which provides redundancy and allows traffic to be segmented between services.

- Producers and consumers:

Producers send data streams to the Kafka brokers, and consumers read from the brokers. In the diagram:

The Producer component is shown in Availability Zone A, feeding data into Broker A.

The Consumer component is illustrated in Availability Zone B, fetching data from Broker B.

- Cluster operation:

The Cluster Operation component is in Availability Zone C and serves as an additional consumer for operations like monitoring, data analytics, or auditing.

- High availability and fault tolerance:

By distributing brokers and ZooKeeper nodes across multiple availability zones, Amazon MSK ensures that the streaming service remains highly available and fault-tolerant. This design minimizes the impact of hardware failures or connectivity issues, as traffic can be redirected to healthy brokers in other zones.
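
The effect of spreading brokers over zones can be illustrated with a small model. The sketch below is only an illustration of the idea, not MSK's actual placement logic: the function names are hypothetical, and the round-robin assignment is an assumption chosen because it gives each zone the same number of brokers.

```python
from collections import Counter
from itertools import cycle


def place_brokers(num_brokers: int, zones: list[str]) -> dict[int, str]:
    """Assign broker IDs 1..num_brokers to zones in round-robin order."""
    if num_brokers % len(zones) != 0:
        raise ValueError("broker count must be a multiple of the zone count")
    zone_cycle = cycle(zones)
    return {broker_id: next(zone_cycle) for broker_id in range(1, num_brokers + 1)}


def surviving_brokers(placement: dict[int, str], failed_zone: str) -> list[int]:
    """Return the broker IDs that stay reachable when one zone is lost."""
    return sorted(b for b, zone in placement.items() if zone != failed_zone)


zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
placement = place_brokers(6, zones)
print(Counter(placement.values()))                 # brokers per zone
print(surviving_brokers(placement, "us-east-1b"))  # brokers left after a zone outage
```

With six brokers in three zones, losing one zone removes two brokers and leaves four to serve traffic.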

Key Features of Amazon MSK

Amazon MSK simplifies the complexities of deploying and managing Apache Kafka by providing a highly reliable and scalable platform for data streaming. It eliminates the challenges of configuring and maintaining Kafka clusters, allowing data engineers to focus on building robust streaming applications. Let’s take a closer look at the key features that make Amazon MSK a valuable solution.

Fully managed service

MSK automatically handles tasks like provisioning, configuration, and patch management, ensuring that your Kafka clusters are up-to-date and running optimally. Routine maintenance and updates are done seamlessly, reducing downtime and ensuring clusters remain secure.

High availability and fault tolerance

MSK distributes Kafka brokers and ZooKeeper nodes across multiple availability zones for redundancy. This design provides fault tolerance, allowing the system to withstand zone-level failures by routing traffic to healthy brokers without affecting data availability.

Security

MSK employs a range of security features, including encryption for data at rest and in transit, and network isolation through Amazon Virtual Private Cloud (VPC). Integration with AWS Identity and Access Management (IAM) enables granular access control, while native Kafka authentication mechanisms can also be configured.

Monitoring and logging

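Through its integration with Amazon CloudWatch, MSK exposes broker health, throughput, and latency metrics in near real time, while AWS CloudTrail records the API calls made against the service. Enhanced monitoring levels collect more detailed Kafka metrics per broker, topic, or partition. Broker logs can be delivered to Amazon CloudWatch Logs, Amazon S3, or Amazon Data Firehose, from which they can be forwarded to Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for analysis.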

Scalability

MSK lets you scale a provisioned cluster by adding brokers or changing the broker instance type, and broker storage can be expanded manually or automatically through an auto scaling policy. Topic partitions are added with the standard Kafka tooling. Together, these options help balance resource cost against throughput as workload demands change.

Integration

MSK supports the native Kafka APIs, enabling easy migration or integration with existing Kafka applications. MSK clusters work seamlessly with other AWS services like AWS Lambda for event-driven processing, Amazon S3 for data storage, and AWS Glue for schema management.

Backup and recovery

Kafka replicates each topic partition across multiple brokers according to the topic’s replication factor, and MSK places those brokers in different availability zones, so data remains available after a broker or hardware failure. For disaster recovery across AWS Regions, data can additionally be replicated to a cluster in another Region, for example with MSK Replicator or Kafka MirrorMaker 2.
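
To make the replication behavior concrete, the sketch below models how the replicas of each partition can be spread over brokers and what remains after one broker fails. It is a simplified round-robin model with hypothetical function names, not Kafka's actual assignment algorithm, which also randomizes the starting broker and can take racks (zones) into account.

```python
def assign_replicas(num_partitions: int, broker_ids: list[int],
                    replication_factor: int) -> dict[int, list[int]]:
    """Place each partition's replicas on consecutive brokers (round-robin)."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(broker_ids)
    return {
        partition: [broker_ids[(partition + r) % n] for r in range(replication_factor)]
        for partition in range(num_partitions)
    }


def live_replicas(assignment: dict[int, list[int]], failed_broker: int) -> dict[int, list[int]]:
    """Drop the failed broker from every partition's replica list."""
    return {p: [b for b in replicas if b != failed_broker]
            for p, replicas in assignment.items()}


assignment = assign_replicas(num_partitions=3, broker_ids=[1, 2, 3], replication_factor=3)
after_failure = live_replicas(assignment, failed_broker=2)
print(assignment)
print(after_failure)
```

In this model every partition still has two replicas after broker 2 is lost, which is why a replication factor of three is a common choice for a three-zone cluster.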

Setting Up Amazon MSK

Setting up Amazon MSK involves several steps spanning network, security, and cluster configuration. Each step affects how secure, scalable, and performant the resulting Kafka environment is, so it’s worth working through them in order. The guide below, prepared by Data Engineer Academy, provides instructions, code snippets, and best practices for each step.

1. Prerequisites

Before setting up your MSK cluster, ensure you have the necessary resources ready:

- AWS Account: An active account with the required permissions for managing MSK, VPCs, and subnets.
- IAM Permissions: An IAM user or role with permissions for creating MSK clusters and managing other AWS resources.
- Network Planning: Plan your VPC subnets and CIDR blocks to avoid IP address conflicts.

2. Creating a VPC and subnets

Your Kafka brokers will operate within a Virtual Private Cloud (VPC) to isolate them from public networks.

Steps:

- Navigate to the VPC Dashboard on the AWS Management Console.
- Click Create VPC and specify details like CIDR block, tenancy, and tags.
- Create subnets in at least two different Availability Zones to ensure fault tolerance and high availability.
- Attach an internet gateway if needed and configure route tables.

Sample Code (CloudFormation):

    Resources:
      MyVPC:
        Type: "AWS::EC2::VPC"
        Properties:
          CidrBlock: "10.0.0.0/16"
          EnableDnsSupport: true
          EnableDnsHostnames: true
      SubnetA:
        Type: "AWS::EC2::Subnet"
        Properties:
          VpcId: !Ref MyVPC
          CidrBlock: "10.0.1.0/24"
          AvailabilityZone: "us-east-1a"
      SubnetB:
        Type: "AWS::EC2::Subnet"
        Properties:
          VpcId: !Ref MyVPC
          CidrBlock: "10.0.2.0/24"
          AvailabilityZone: "us-east-1b"
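
Before deploying a template like the one above, the CIDR plan can be checked offline. The following is a minimal sketch using Python's standard `ipaddress` module; the helper names `plan_subnets` and `find_overlaps` are hypothetical, and the choice to skip the first /24 block is an assumption made only so that the result matches the template's 10.0.1.0/24 and 10.0.2.0/24.

```python
import ipaddress
from itertools import islice


def plan_subnets(vpc_cidr: str, prefix: int, count: int, skip: int = 0):
    """Carve `count` subnets of size /prefix out of the VPC range, skipping the first `skip`."""
    vpc = ipaddress.ip_network(vpc_cidr)
    return list(islice(vpc.subnets(new_prefix=prefix), skip, skip + count))


def find_overlaps(cidrs: list[str]) -> list[tuple[str, str]]:
    """Return every pair of CIDR blocks that share addresses."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    return [(str(a), str(b))
            for i, a in enumerate(nets)
            for b in nets[i + 1:]
            if a.overlaps(b)]


planned = plan_subnets("10.0.0.0/16", prefix=24, count=2, skip=1)
print([str(net) for net in planned])
print(find_overlaps([str(net) for net in planned]))   # empty list means no conflicts
print(find_overlaps(["10.0.1.0/24", "10.0.1.128/25"]))  # a deliberately conflicting pair
```

Running a check like this during network planning catches overlapping ranges before any AWS resources are created.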

3. Configuring security groups

A security group acts as a firewall to control access to Kafka brokers.

Create a security group to allow inbound traffic to your brokers on required ports.

Set up rules to permit only trusted sources (e.g., producers and consumers) to connect.

| Port | Protocol | Purpose |
|------|----------|---------|
| 9092 | TCP | Kafka broker access (plaintext) |
| 9094 | TCP | Kafka broker access (TLS) |
| 2181 | TCP | ZooKeeper access |

Table: Port configuration
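
As a rough sketch, rules for ports 9092 and 2181 from the table can be expressed as data in the shape that the EC2 `authorize_security_group_ingress` API accepts for its `IpPermissions` parameter. The helper name and the security group ID below are hypothetical placeholders; the rules reference a client security group rather than an IP range so that only trusted producers and consumers can connect.

```python
def ingress_rule(port: int, source_group_id: str, description: str) -> dict:
    """Build one TCP ingress rule that admits traffic only from another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "UserIdGroupPairs": [{"GroupId": source_group_id, "Description": description}],
    }


CLIENT_SG = "sg-0123456789abcdef0"  # hypothetical security group of producers/consumers

rules = [
    ingress_rule(9092, CLIENT_SG, "Kafka broker access"),
    ingress_rule(2181, CLIENT_SG, "ZooKeeper access"),
]
for rule in rules:
    print(rule["FromPort"], rule["UserIdGroupPairs"][0]["Description"])
```

With boto3 installed and credentials configured, a list like `rules` could be passed as `IpPermissions` together with the broker security group's `GroupId`.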

4. Creating an Amazon MSK cluster

Steps:

- Go to the MSK Dashboard on the AWS Management Console.
- Click Create Cluster and select your creation method.
- Provide a cluster name, select your previously created VPC and subnets, and specify the broker instance type (e.g., kafka.m5.large).
- Choose the number of brokers per AZ to ensure high availability.
- Configure logging, monitoring, and storage as required.

Sample code (AWS CLI):

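    # The subnet and security group IDs are placeholders; replace them with your own.
    # The broker count must be a multiple of the number of client subnets (two here).
    aws kafka create-cluster \
      --cluster-name myKafkaCluster \
      --broker-node-group-info 'InstanceType=kafka.m5.large,ClientSubnets=[subnet-123,subnet-456],SecurityGroups=[sg-789]' \
      --kafka-version "2.8.1" \
      --number-of-broker-nodes 2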
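
The same request can be assembled programmatically. The sketch below only builds and validates the parameter dictionary and does not call AWS; the function name is hypothetical, the IDs are the placeholders used in this section, and the keys follow the MSK `CreateCluster` API. It also enforces the MSK requirement that the broker count be a multiple of the number of client subnets.

```python
def build_create_cluster_request(cluster_name: str, kafka_version: str, instance_type: str,
                                 client_subnets: list[str], security_groups: list[str],
                                 number_of_broker_nodes: int) -> dict:
    """Return keyword arguments for an MSK CreateCluster call after basic validation."""
    if not client_subnets:
        raise ValueError("at least one client subnet is required")
    if number_of_broker_nodes % len(client_subnets) != 0:
        raise ValueError("number of broker nodes must be a multiple of the number of client subnets")
    return {
        "ClusterName": cluster_name,
        "KafkaVersion": kafka_version,
        "NumberOfBrokerNodes": number_of_broker_nodes,
        "BrokerNodeGroupInfo": {
            "InstanceType": instance_type,
            "ClientSubnets": list(client_subnets),
            "SecurityGroups": list(security_groups),
        },
    }


request = build_create_cluster_request(
    cluster_name="myKafkaCluster",
    kafka_version="2.8.1",
    instance_type="kafka.m5.large",
    client_subnets=["subnet-123", "subnet-456"],  # placeholder IDs
    security_groups=["sg-789"],                   # placeholder ID
    number_of_broker_nodes=2,
)
print(request)
```

With boto3 installed and credentials configured, the dictionary could be passed as `boto3.client("kafka").create_cluster(**request)`.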

5. Broker authentication and encryption

- Encryption at Rest: Enable encryption using AWS KMS-managed keys.
- Encryption in Transit: Secure data between brokers, clients, and ZooKeeper nodes with TLS.

Sample configuration:

    EncryptionInfo:
      EncryptionAtRest:
        DataVolumeKMSKeyId: "your-kms-key-id"
      EncryptionInTransit:
        InCluster: true
        ClientBroker: "TLS"
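
The client-broker encryption setting also determines which listener port clients connect to. The sketch below builds the same structure as the configuration above and maps the `ClientBroker` value to ports; the helper names are hypothetical, and the mapping covers only the plaintext (9092) and TLS (9094) listeners, not the separate ports MSK uses for SASL-based authentication.

```python
# Listener ports by client-broker encryption mode (plaintext and TLS listeners only).
CLIENT_BROKER_PORTS = {
    "TLS": [9094],
    "TLS_PLAINTEXT": [9092, 9094],
    "PLAINTEXT": [9092],
}


def build_encryption_info(kms_key_id: str, client_broker: str = "TLS",
                          in_cluster: bool = True) -> dict:
    """Return an EncryptionInfo structure, rejecting unknown ClientBroker values."""
    if client_broker not in CLIENT_BROKER_PORTS:
        raise ValueError(f"unsupported ClientBroker value: {client_broker}")
    return {
        "EncryptionAtRest": {"DataVolumeKMSKeyId": kms_key_id},
        "EncryptionInTransit": {"ClientBroker": client_broker, "InCluster": in_cluster},
    }


def client_ports(encryption_info: dict) -> list[int]:
    """Ports that clients need to reach for the configured encryption mode."""
    return CLIENT_BROKER_PORTS[encryption_info["EncryptionInTransit"]["ClientBroker"]]


info = build_encryption_info("your-kms-key-id")
print(client_ports(info))
```

A cluster configured with `TLS` only needs port 9094 opened to clients, so the plaintext port can stay closed in the security group.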

6. Monitoring and Logging

Enable enhanced monitoring to collect detailed Kafka metrics via CloudWatch, and set up broker logs for analysis.

Enhanced Monitoring:

- Enable enhanced monitoring in MSK to access broker-level metrics.

Logging:

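- Deliver broker logs to Amazon CloudWatch Logs, Amazon S3, or Amazon Data Firehose (which can forward them to Amazon OpenSearch Service, formerly Amazon Elasticsearch Service) for in-depth analysis.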

7. Connecting producers and consumers

Retrieve the bootstrap broker endpoint from the MSK console and use it in client applications.
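
The bootstrap endpoint can also be fetched through the MSK `GetBootstrapBrokers` API, whose response contains fields such as `BootstrapBrokerString` (plaintext) and `BootstrapBrokerStringTls`. The sketch below works on a sample response with made-up hostnames and turns it into standard Kafka client properties; the function names are hypothetical.

```python
def select_bootstrap_servers(response: dict, use_tls: bool = True) -> str:
    """Pick the bootstrap string that matches the client's encryption mode."""
    key = "BootstrapBrokerStringTls" if use_tls else "BootstrapBrokerString"
    if key not in response:
        raise KeyError(f"cluster does not expose {key}")
    return response[key]


def render_client_properties(bootstrap_servers: str, use_tls: bool = True) -> str:
    """Render a minimal Kafka client properties file as text."""
    protocol = "SSL" if use_tls else "PLAINTEXT"
    return "\n".join([
        f"bootstrap.servers={bootstrap_servers}",
        f"security.protocol={protocol}",
    ])


# Sample response with hypothetical hostnames, shaped like a GetBootstrapBrokers result.
sample_response = {
    "BootstrapBrokerStringTls": "b-1.example.internal:9094,b-2.example.internal:9094",
}
servers = select_bootstrap_servers(sample_response)
print(render_client_properties(servers))
```

The resulting properties can be saved to a file and passed to Kafka's command-line tools or loaded by a producer or consumer application.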

Regularly monitor MSK clusters via the MSK Dashboard and CloudWatch to analyze broker health, throughput, and latency.

By following these steps, you’ll have a well-configured, secure Amazon MSK cluster that is optimized for high performance and reliability. Make sure to monitor its health and adjust scaling as needed to handle dynamic data traffic.

Wrap-Up

To delve deeper into Amazon MSK and other data streaming technologies, enroll in the Data Engineer Academy courses. Our courses provide comprehensive, hands-on training to help you understand the full capabilities of data streaming tools like MSK and apply them effectively to your projects. Sign up today to gain expert insights, refine your data engineering skills, and transform your streaming architectures into efficient data solutions.